Two weeks ago, we discussed Hannah and Eppley's thought experiment. Hannah and Eppley argued that a fundamental theory that is only partly quantized leads to contradictions either with quantum mechanics or special relativity; in particular, we cannot leave gravity unquantized.

However, we also discussed that this thought experiment might be impossible to perform in our universe, since it requires an essentially noiseless system and detectors more massive than the mass we have available. Unless you believe in a multiverse that offers such an environment somewhere, this leaves us in a philosophical conundrum, since we conclude that any contradiction in Hannah and Eppley's thought experiment is unobservable, at least for us. And if you do believe in a multiverse, maybe gravity is only quantized in parts of it.

So you might not be convinced and insist that gravity may remain classical. Here I want to examine this option in more detail and explain why it is not a fruitful approach. If you know a thing or two about semi-classical gravity, you can skip the preliminaries.

Preliminaries

If gravity remained classical, we would have a theory that couples a quantum field to classical general relativity (GR). GR describes the curvature of space-time (denoted R with indices) that is caused by distributions of matter and energy, encoded in the so-called "stress-energy-tensor" (denoted T with indices). The coupling constant is Newton's constant G.

In a quantum field theory, the stress-energy-tensor becomes an operator that acts on elements of the Hilbert-space. But in the equations of GR, one can't just replace the classical stress-energy-tensor with a quantum operator, since the latter has non-vanishing commutators that the former doesn't have. Since both would have to be equal to a tensor-valued function of the classical background, this will not work. Instead, we have to take the classical part of the operator, that is, its expectation value in some quantum state, denoted as usual by bra-kets, $\langle T \rangle$.
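Written out, the equations in question take the following standard form. This is a sketch under stated assumptions: units with c = 1, no cosmological constant, and $|\psi\rangle$ standing for whatever quantum state the matter fields are in. The index labels $\mu, \nu$ and the metric symbol $g_{\mu\nu}$ are notation introduced here for illustration.

```latex
R_{\mu\nu} - \frac{1}{2} g_{\mu\nu} R
  = 8 \pi G \, \langle \psi | \hat{T}_{\mu\nu} | \psi \rangle
```

The left side is built entirely from the classical metric; the right side is an ordinary tensor-valued function only because the operator has been replaced by its expectation value.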

This is called semi-classical gravity; quantum fields coupled to a classical background. Why, you might ask, don't we just settle for this?

To begin with, semi-classical gravity doesn't actually solve the problems that we were expecting quantum gravity would solve. In particular, semi-classical gravity is the origin rather than the solution of the black-hole information loss problem. It also doesn't prevent singularities (though in some cases it might help). But, you might argue, maybe we were just expecting too much. Maybe the answers to these problems lie entirely elsewhere. That semi-classical gravity doesn't help us here doesn't mean the theory isn't viable; it just means it doesn't do what we wanted it to do. This explains a certain lack of motivation for studying this option, but isn't a good scientific reason to exclude it.

Okay, you have a point here. But semi-classical gravity not only fails to solve these problems, it also brings with it a bunch of new ones. For one, the expectation value of the stress-energy-tensor is divergent and has to be regularized, a problem that becomes considerably more difficult in curved space. This is a technical problem that has been studied for some decades now, and with great success. While some problems remain, you might take the point of view that they will be addressed sooner or later.

But a much more severe problem with the semi-classical equations is the measurement process. Recall that if a particle is in a superposition of being here with probability 1/2 and there with probability 1/2, the expectation value of its field has to be updated upon measurement. Suddenly, the particle, and with it the expectation value, is here or there with probability 1. This process violates local conservation of the expectation value of the stress-energy-tensor. But this local conservation is built into GR: it is always identically fulfilled. This means that semi-classical gravity can't be valid during the measurement. But still, you might insist, we haven't understood the measurement in quantum mechanics anyway, and maybe the theory has to be modified suitably during measurement, so that in fact the conservation law can be temporarily violated.
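For reference, the conservation law at stake can be spelled out as follows. This is a sketch in standard notation, assuming c = 1 and no cosmological constant. The symbols $\nabla^\mu$ (covariant derivative), $g_{\mu\nu}$ (metric) and the index labels are introduced here for illustration.

```latex
% Contracted Bianchi identity: holds identically for any metric
\nabla^{\mu} \left( R_{\mu\nu} - \frac{1}{2} g_{\mu\nu} R \right) = 0
% Consistency of the semi-classical equations then requires
\nabla^{\mu} \langle T_{\mu\nu} \rangle = 0
```

A sudden jump of the expectation value upon measurement is at odds with the second line, while the first line cannot be switched off.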

You are really stubborn, aren't you?

So you insist, but I hope the latter problem illuminated just how absurd semi-classical gravity is if you think about a quantum state in a superposition of different positions, e.g. a photon that went through a beam splitter. Quantum mechanically, it has a 50% chance of going this way or that. But according to semi-classical gravity, its gravitational field goes half both ways! If the photon went left, its gravitational field went half with the photon, and half to the right. Surely, you'd think there must be some way to experimentally exclude this absurdity?

Page and Geilker's experiment

Page and Geilker set out in 1981 to show exactly that, the absurdity of semi-classical gravity, with a suitably designed experiment. The most amazing thing about their study is that it got published in PRL, for the experiment is absurd in itself.

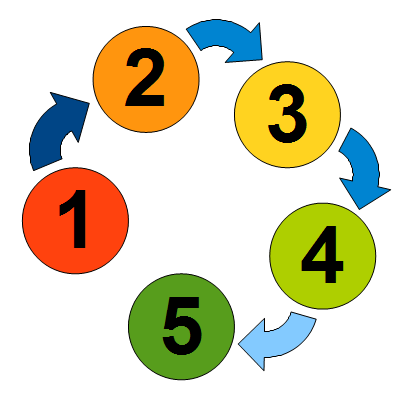

Their reasoning was as follows. Suppose you have a Cavendish-like setup, consisting of two pairs of massive balls connected by rods; see the image above (you are looking at the setup from above).

One rod (grey) hangs on a wire that has a mirror attached to it, so you can measure its motion by tracking the position of a laser beam shining onto the mirror. The other rod (not shown), connecting the two other balls (blue), is turned to bring the balls into one of two positions, A or B. The gravitational attraction between the balls will cause the wire to twist in one of two directions, as indicated by the arrows.

Or so you think if you know classical gravity. But if the blue balls are in a quantum superposition of A and B, then the gravitational attraction of the expectation value of their mass distribution on the grey balls cancels, the wire doesn't twist, and the laser light doesn't move.
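The cancellation can be illustrated with a toy calculation. What follows is a minimal sketch, not Page and Geilker's actual geometry: it assumes a one-dimensional Newtonian model with a test mass at the origin and a source mass at either +d (position A) or -d (position B). The function names and all numerical values are made up for illustration.

```python
# Toy 1D model; every number here is an illustrative assumption,
# not a value from Page and Geilker's experiment.
G = 6.674e-11  # Newton's constant in m^3 kg^-1 s^-2


def newton_force(source_x, source_mass, test_mass):
    """Signed force on a test mass at x = 0 from a point source at source_x != 0."""
    direction = 1.0 if source_x > 0 else -1.0  # attraction points toward the source
    return direction * G * source_mass * test_mass / source_x**2


def semiclassical_force(positions, probabilities, source_mass, test_mass):
    """Force sourced by the expectation value of the mass distribution."""
    return sum(
        p * newton_force(x, source_mass, test_mass)
        for x, p in zip(positions, probabilities)
    )


d = 0.1            # hypothetical distance of positions A (+d) and B (-d), in m
M, m = 1.0, 0.01   # hypothetical source and test masses, in kg

force_A = newton_force(+d, M, m)   # source definitely at A
force_B = newton_force(-d, M, m)   # source definitely at B
force_superposed = semiclassical_force([+d, -d], [0.5, 0.5], M, m)

print(force_A, force_B, force_superposed)
```

In this symmetric toy setup the two classical forces are equal and opposite, so the expectation-value source pulls on the test mass with zero net force. That is the sense in which the wire wouldn't twist.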

To bring the blue balls into a superposition, Page and Geilker used a radioactive sample that decayed with some probability within 30 seconds, and with about equal probability within a longer time-span after this. Depending on the outcome of the decay, the blue balls remain in position A or assume B. The mirror moved, so they concluded that the gravitational field of the balls can't have been the expectation value of the superposition of A and B, and thus semi-classical gravity is wrong.

Well, I hope you saw Schrödinger's cat laughing. While the decay of a radioactive sample is a purely quantum mechanical process, the wavefunction is long decohered by the time the rod has been adjusted. The blue balls have no more been in a quantum superposition than Schrödinger's cat ever was in a superposition of dead and alive.

This raises the question of whether Page and Geilker's experiment can actually be realized. The problem is, as always with quantum gravity, that the gravitational interaction is very weak. The heaviest masses that can be brought into a superposition of different locations, presently molecules with masses of some thousand GeV, still have gravitational fields far too weak to be measurable. More can be said about this, but that deserves another post another time.
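To get a feeling for just how weak, here is a rough back-of-the-envelope estimate. It is a sketch under stated assumptions: the molecule is treated as a Newtonian point mass, "some thousand GeV" is taken to mean 5000 GeV, and the field is evaluated at a distance of one micrometer. Both numbers and the function name are chosen purely for illustration.

```python
G = 6.674e-11               # Newton's constant in m^3 kg^-1 s^-2
GEV_TO_KG = 1.78266192e-27  # mass equivalent of 1 GeV/c^2 in kg


def gravitational_acceleration(mass_gev, distance_m):
    """Newtonian acceleration caused by a point mass (given in GeV/c^2) at a distance."""
    mass_kg = mass_gev * GEV_TO_KG
    return G * mass_kg / distance_m**2


molecule_mass_gev = 5000.0  # assumption: stand-in for "some thousand GeV"
distance_m = 1e-6           # assumption: probe sits one micrometer away

acceleration = gravitational_acceleration(molecule_mass_gev, distance_m)
print(f"{acceleration:.1e} m/s^2")
```

With these made-up inputs the acceleration comes out at a few times 10^-22 m/s^2, more than twenty orders of magnitude below the 9.8 m/s^2 we feel on Earth.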

Bottomline

Semi-classical gravity is not considered a fundamentally meaningful description of Nature for theoretical reasons. These are good and convincing reasons, yet semi-classical gravity has stubbornly refused experimental falsification. This tells you just how frustrating the search for quantum gravity phenomenology can be.