This video is about Roger Penrose’s idea for the beginning of the universe and its end, conformal cyclic cosmology, CCC for short. It’s a topic that a lot of you have asked for ever since Roger Penrose won the Nobel Prize in 2020. The reason I’ve put off talking about it is that I don’t enjoy criticizing other people’s ideas, especially if they’re people I personally know. And also, who am I to criticize a Nobel Prize winner, on YouTube of all places?

However, Penrose himself has been very outspoken about his misgivings about string theory and contemporary cosmology, in particular inflation, and so in the end I think it’ll be okay if I tell you what I think about conformal cyclic cosmology. And that’s what we’ll talk about today.

First things first: what does conformal cyclic cosmology mean? I think we’re all good with the word cosmology, it’s a theory for the history of the entire universe, alright. That it’s cyclic means it repeats in some sense. Penrose calls these cycles eons. Each starts with a big bang, but it doesn’t end with a big crunch.

A big crunch would happen when the expansion of the universe changes to a contraction and eventually all the matter is well, crunched together. A big crunch is like a big bang in reverse. This does not happen in Conformal Cyclic Cosmology. Rather, the history of the universe just kind of tapers out. Matter becomes more and more thinly diluted. And then there’s the word conformal. We need that to get from the thinly diluted end of one eon to the beginning of the next. But what does conformal mean?

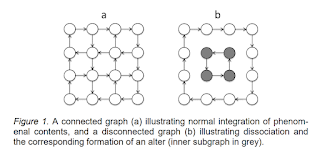

A conformal rescaling is a stretching or shrinking that maintains all relative angles. Penrose uses that because you can use a conformal rescaling to make something that has infinite size into something that has finite size.
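In formulas, and using notation that is not in the original talk (a metric $g_{\mu\nu}$ and a positive function $\Omega$ are my assumed symbols), a conformal rescaling can be sketched like this. The second line shows why angles survive: the factor $\Omega^2$ cancels between numerator and denominator.

```latex
% Conformal rescaling of the metric by a positive, position-dependent factor
\tilde{g}_{\mu\nu}(x) = \Omega^2(x)\, g_{\mu\nu}(x), \qquad \Omega(x) > 0

% The angle between two vectors u and v is unchanged
\cos\theta
  = \frac{\tilde{g}(u,v)}{\sqrt{\tilde{g}(u,u)\,\tilde{g}(v,v)}}
  = \frac{\Omega^2\, g(u,v)}{\sqrt{\Omega^2 g(u,u)\,\Omega^2 g(v,v)}}
  = \frac{g(u,v)}{\sqrt{g(u,u)\, g(v,v)}}
```

Lengths, in contrast, pick up a factor of $\Omega$, which is what allows an infinite distance to be squeezed into a finite one.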

Here is a simple example of a conformal rescaling. Suppose you have an infinite two-dimensional plane. And suppose you have half of a sphere. Now from every point on the infinite plane, you draw a line to the center of the sphere. At the point where it pierces the sphere, you project that down onto a disk. That way you map every point of the infinite plane into the disk underneath the sphere. A famous example of a conformal rescaling is this image from Escher. Imagine that those bats are all the same size and once filled in an infinite plane. In this image they are all squeezed into a finite area.
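The plane-to-disk construction can be tried out numerically. The following is a minimal sketch under my own assumptions: the sphere has radius 1, its center sits at distance `height` from the plane, and the function name `plane_to_disk` is made up for illustration. It only demonstrates the "infinite into finite" part of the argument and does not check what happens to angles.

```python
import math


def plane_to_disk(x, y, height=1.0):
    """Map a point (x, y) of an infinite plane into the unit disk.

    Assumed setup: a unit sphere whose center lies at distance `height`
    from the plane. The line from the plane point to the center pierces
    the sphere at (x, y, height) / norm; dropping the third coordinate
    projects that point onto the disk.
    """
    norm = math.sqrt(x * x + y * y + height * height)
    return x / norm, y / norm


if __name__ == "__main__":
    # Points further and further out on the plane land closer and closer
    # to the rim of the disk, but always inside it.
    for r in (0.0, 1.0, 10.0, 1000.0):
        u, v = plane_to_disk(r, 0.0)
        print(f"plane radius {r:>8} -> disk radius {math.hypot(u, v):.6f}")
```

A plane radius $r$ ends up at disk radius $r/\sqrt{r^2 + 1}$ for `height = 1`, which stays below 1 no matter how large $r$ gets.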

Now in Penrose’s case, the infinite thing that you rescale is not just space, but space-time. You rescale them both and then you glue the end of our universe to a new beginning. Mathematically you can totally do that. But why would you? And what’s with the physics?

Let’s first talk about why you would want to do that. Penrose is trying to solve a big puzzle in our current theories for the universe. It’s the second law of thermodynamics: entropy increases. We see it increase. But that entropy increases means it must have been smaller in the past. Indeed, the universe must have started out with very small entropy, otherwise we just can’t explain what we see. That the early universe must have had small entropy is often called the Past Hypothesis, a term coined by the philosopher David Albert.

Our current theories work perfectly fine with the past hypothesis. But of course it would be better if one didn’t need it. If one instead had a theory from which one can derive it.

Penrose has attacked this problem by first finding a way to quantify the entropy in the gravitational field. He argued already in the 1970s that it’s encoded in the Weyl curvature tensor. That’s, loosely speaking, part of the complete curvature tensor of space-time. This Weyl curvature tensor, according to Penrose, should be very small in the beginning of the universe. Then the entropy would be small and the past hypothesis would be explained. He calls this the Weyl Curvature Hypothesis.

So, instead of the rather vague past hypothesis, we now have a mathematically precise Weyl Curvature Hypothesis. Like the entropy, the Weyl Curvature would start initially very small and then increase as the universe gets older. This goes along with the formation of bigger structures like stars and galaxies.

The question remains: how do you get the Weyl curvature to be small? Here’s where the conformal rescaling kicks in. You take the end of a universe where the Weyl curvature is large, you rescale it, which makes it very small, and then you postulate that this is the beginning of a new universe.

Okay, so that explains why you may want to do that, but what’s with the physics? The reason why this rescaling works mathematically is that in a conformally invariant universe there’s no meaningful way to talk about time. It’s like if I show you a piece of the Koch snowflake and ask if that’s big or small. These pieces repeat infinitely often so you can’t tell. In CCC it’s the same with time at the end of the universe.

But the conformal rescaling and gluing only works if the universe approaches conformal invariance towards the end of its life. This may or may not be the case. The universe contains massive particles, and massive particles are not conformally invariant. That’s because particles are also waves, and massive particles are waves with a particular wavelength. That’s the Compton wavelength, which is inversely proportional to the mass. This is a specific scale, so if you rescale the universe, it will not remain the same.
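For reference, the Compton wavelength of a particle of mass $m$ is given by the standard formula below, where $h$ is Planck’s constant and $c$ the speed of light (the symbols are not spelled out in the talk itself):

```latex
\lambda_C = \frac{h}{m c}
```

Every nonzero mass therefore comes with a fixed length, and a fixed length is exactly what a rescaling does not leave alone. In the limit $m \to 0$ this length grows without bound, so a massless particle singles out no scale of this kind.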

However, the masses of the elementary particles all come from the Higgs field, so if you can somehow get rid of the Higgs at the end of the universe, then that would be conformally invariant and everything would work. Or maybe you can think of some other way to get rid of massive particles. And since no one really knows what may happen at the end of the universe anyway, ok, well, maybe it works somehow.

But we can’t test what will happen in a hundred billion years. So how could one test Penrose’s cyclic cosmology? Interestingly, this conformal rescaling doesn’t wash out all the details from the previous eon. Gravitational waves survive because they scale differently than the Weyl curvature. And those gravitational waves from the previous eon affect how matter moves after the big bang of our eon, which in turn leaves patterns in the cosmic microwave background. Indeed, rather specific patterns.

Roger Penrose first said one should look for rings. These rings would come from the collisions of supermassive black holes in the eon before ours. This is pretty much the most violent event one can think of and so should produce a lot of gravitational waves. However, the search for those signals remained inconclusive.

Penrose then found a better observational signature from the earlier eon which he called Hawking points. Supermassive black holes in the earlier eon evaporate and leave behind a cloud of Hawking radiation which spreads out over the whole universe. But at the end of the eon, you do the rescaling and you squeeze all that Hawking radiation together. That carries over into the next eon and makes a localized point with some rings around it in the CMB.

And these Hawking points are actually there. It’s not only Penrose and his people who have found them in the CMB. The thing is though that some cosmologists have argued they should also be there in the most popular model for the early universe, which is inflation. So, this prediction may not be wrong, but it’s maybe not a good way to tell Penrose’s model from others.

Penrose also says that this conformal rescaling requires that one introduces a new field which gives rise to a new particle. He has called this particle the “erebon”, named after erebos, the god of darkness. The erebons might make up dark matter. They are heavy particles with masses of about the Planck mass, so that’s much heavier than the particles astrophysicists typically consider for dark matter. But it’s not ruled out that dark matter particles might be so heavy and indeed other astrophysicists have considered similar particles as candidates for dark matter.

Penrose’s erebons are ultimately unstable. Remember you have to get rid of all the masses at the end of the eon to get to conformal invariance. So Penrose predicts that dark matter should slowly decay. That decay however is so slow that it is hard to test. He has also predicted that there should be rings around the Hawking points in the CMB B-modes which is the thing that the BICEP experiment was looking for. But those too haven’t been seen – so far.

Okay, so that’s my brief summary of conformal cyclic cosmology, now what do I think about it. Mostly I have questions. The obvious thing to pick on is that actually the universe isn’t conformally invariant and that postulating all Higgs bosons disappear or something like that is rather ad hoc. But this actually isn’t my main problem. Maybe I’ve spent too much time among particle physicists, but I’ve seen far worse things. Unparticles, anybody?

One thing that gives me headaches is that it’s one thing to do a conformal rescaling mathematically. Understanding what this physically means is another thing entirely. You see, just because you can create an infinite sequence of eons doesn’t mean the duration of any eon is now finite. You can totally glue together infinitely many infinitely large space-times if you really want to. Saying that time becomes meaningless doesn’t really explain to me what this rescaling physically does.

Okay, but maybe that’s a rather philosophical misgiving. Here is a more concrete one. If the previous eon leaves information imprinted in the next one, then it isn’t obvious that the cycles repeat in the same way. Instead, I would think, they will generally end up with larger and larger fluctuations that will pass on larger and larger fluctuations to the next eon because that’s a positive feedback. If that was so, then Penrose would have to explain why we are in a universe that’s special for not having these huge fluctuations.

Another issue is that it’s not obvious you can extend these cosmologies back in time indefinitely. This is a problem also for “eternal inflation.” Eternal inflation is eternal really only into the future. It has a finite past. You can calculate this just from the geometry. In a recent paper, Kinney and Stein showed that a model of cyclic cosmology put forward by Ijjas and Steinhardt has the same problem. The cycle might go on infinitely, alright, but only into the future, not into the past. It’s not clear at the moment whether this is also the case for conformal cyclic cosmology. I don’t think anyone has looked at it.

Finally, I am not sure that CCC actually solves the problem it was supposed to solve. Remember we are trying to explain the past hypothesis. But a scientific explanation shouldn’t be more difficult than the thing you’re trying to explain. And CCC requires some assumptions, about the conformal invariance and the erebons, that at least to me don’t seem any better than the past hypothesis.

Having said that, I think Penrose’s point that the Weyl curvature in the early universe must have been small is really important and it hasn’t been appreciated enough. Maybe CCC isn’t exactly the right conclusion to draw from it, but it’s a mathematical puzzle that in my opinion deserves a little more attention.
