Even though the sensitivity of dark matter detectors has improved by more than five orders of magnitude since the early 1980s, all results so far are compatible with zero events. The searches for axions, another popular dark matter candidate, haven’t fared any better. Coming generations of dark matter experiments will cross into the regime where the neutrino background becomes comparable to the expected signal. But, as a colleague recently pointed out to me, this merely means that the experimentalists have to understand the background better.

Maybe in 100 years they’ll still sit in caves, deep underground. And wait.

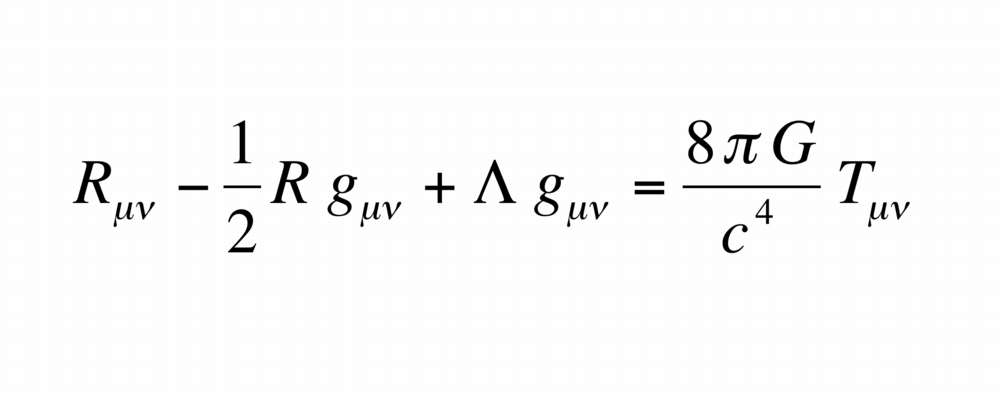

Meanwhile, others are running out of patience. Particle dark matter is a great explanation for all the cosmological observations that general relativity sourced by normal matter cannot explain. But maybe it isn’t right after all. The alternative to using general relativity and adding particles is to modify general relativity so that space-time curves differently in response to the matter we already know.

Already in the early 1980s, Mordehai Milgrom showed that modifying gravity has the potential to explain observations commonly attributed to particle dark matter. He proposed Modified Newtonian Dynamics, MOND for short, to explain the galactic rotation curves instead of adding particle dark matter. Intriguingly, MOND, despite having only one free parameter, fits a large number of galaxies. It doesn’t work well for galaxy clusters, but its success with galaxies clearly shows that many galaxies are similar in very distinct ways, ways that the concordance model (also known as LambdaCDM) hasn’t been able to account for.
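To make the "one free parameter" concrete, here is a minimal sketch of how MOND flattens a rotation curve. It assumes a point-mass galaxy and the so-called simple interpolation function mu(x) = x / (1 + x); both are illustrative choices of mine, not something taken from Milgrom's papers or from the studies discussed here. The value of the acceleration scale `A0` is the commonly quoted one, and the function names are hypothetical.

```python
import math

G = 6.674e-11      # gravitational constant, m^3 kg^-1 s^-2
A0 = 1.2e-10       # MOND acceleration scale, m s^-2 (commonly quoted value; the one free parameter)
M_SUN = 1.989e30   # solar mass, kg
KPC = 3.086e19     # kiloparsec, m


def newtonian_acceleration(mass_kg, r_m):
    """Newtonian pull of a point mass (an assumed toy galaxy)."""
    return G * mass_kg / r_m**2


def mond_acceleration(g_newton, a0=A0):
    """Solve mu(g / a0) * g = g_newton for g, with the assumed mu(x) = x / (1 + x).

    This reduces to the quadratic g^2 - g_newton * g - g_newton * a0 = 0,
    of which we take the positive root.
    """
    return 0.5 * (g_newton + math.sqrt(g_newton**2 + 4.0 * g_newton * a0))


def circular_velocity(g, r_m):
    """Speed of a circular orbit at radius r_m under acceleration g."""
    return math.sqrt(g * r_m)


if __name__ == "__main__":
    mass = 5e10 * M_SUN  # illustrative baryonic mass, not a fit to any galaxy
    for r_kpc in (5, 10, 20, 40, 80):
        r = r_kpc * KPC
        g_n = newtonian_acceleration(mass, r)
        v_newton = circular_velocity(g_n, r) / 1e3
        v_mond = circular_velocity(mond_acceleration(g_n), r) / 1e3
        print(f"r = {r_kpc:3d} kpc  Newton: {v_newton:6.1f} km/s  MOND: {v_mond:6.1f} km/s")
```

In this toy setup the Newtonian velocity keeps falling with radius, while the MOND velocity levels off towards the fourth root of G times the mass times `A0`. That the asymptotic velocity depends only on the visible mass and one universal constant is the sense in which a single parameter can fit many galaxies.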

In its simplest form the concordance model has sources which are collectively described as homogeneous throughout the universe – an approximation known as the cosmological principle. In this form, the concordance model doesn’t predict how galaxies rotate – it merely describes the dynamics on supergalactic scales.

To get galaxies right, physicists also have to take into account astrophysical processes within the galaxies: how stars form, which stars form, where they form, how they interact with the gas, how long they live, when and how they go supernova, what magnetic fields permeate the galaxies, how the fields affect the intergalactic medium, and so on. It’s a mess, and it requires intricate numerical simulations to figure out just exactly how galaxies come to look how they look.

And so, physicists today are divided into two camps. In the larger camp are those who think that the observed galactic regularities will eventually be accounted for by the concordance model. It’s just that it’s a complicated question that needs to be answered with numerical simulations, and the current simulations aren’t good enough. In the smaller camp are those who think there’s no way these regularities will be accounted for by the concordance model, and modified gravity is the way to go.

In a recent paper, McGaugh et al. reported a correlation among the rotation curves of 153 observed galaxies. They plotted the gravitational pull from the visible matter in the galaxies (gbar) against the gravitational pull inferred from the observations (gobs), and found that the two are closely related.

Figure from arXiv:1609.05917 [astro-ph.GA]
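For readers who want to play with the relation between gbar and gobs, the following is a small sketch of the one-parameter fitting function that McGaugh et al. use in arXiv:1609.05917. The functional form and the value of the acceleration scale `G_DAGGER` are taken from that paper, not from this post, and the function names are my own.

```python
import math

G_DAGGER = 1.2e-10  # fitted acceleration scale in m s^-2, value as reported in arXiv:1609.05917


def g_obs_from_g_bar(g_bar, g_dagger=G_DAGGER):
    """Observed acceleration predicted from the baryonic one.

    Implements g_obs = g_bar / (1 - exp(-sqrt(g_bar / g_dagger))).
    expm1 keeps the denominator accurate when g_bar is much smaller than g_dagger.
    """
    x = math.sqrt(g_bar / g_dagger)
    return g_bar / -math.expm1(-x)


def mass_discrepancy(g_bar, g_dagger=G_DAGGER):
    """Ratio g_obs / g_bar, i.e. how much pull seems to be 'missing'."""
    return g_obs_from_g_bar(g_bar, g_dagger) / g_bar


if __name__ == "__main__":
    for g_bar in (1e-8, 1e-9, 1e-10, 1e-11, 1e-12):
        g_obs = g_obs_from_g_bar(g_bar)
        print(f"g_bar = {g_bar:.1e}  g_obs = {g_obs:.3e}  ratio = {g_obs / g_bar:.2f}")
```

At high accelerations the function returns gobs close to gbar, so no extra matter is needed. At low accelerations it approaches the square root of gbar times `G_DAGGER`, which is the regime where the discrepancy usually attributed to dark matter shows up.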

This correlation – the mass-discrepancy-acceleration relation (MDAR) – is, as they emphasize, not itself new; it’s just a new way to present previously known correlations. As they write in the paper:

“[This Figure] combines and generalizes four well-established properties of rotating galaxies: flat rotation curves in the outer parts of spiral galaxies; the “conspiracy” that spiral rotation curves show no indication of the transition from the baryon-dominated inner regions to the outer parts that are dark matter-dominated in the standard model; the Tully-Fisher relation between the outer velocity and the inner stellar mass, later generalized to the stellar plus atomic hydrogen mass; and the relation between the central surface brightness of galaxies and their inner rotation curve gradient.”

But this was only act 1.

In act 2, another group of researchers responds to the McGaugh et al paper. They present results of a numerical simulation for galaxy formation and claim that particle dark matter can account for the MDAR. The end of MOND, so they think, is near.

Figure from arXiv:1610.06183 [astro-ph.GA]

McGaugh, hero of act 1, points out that the sample size for this simulation is tiny and also pre-selected to reproduce galaxies like those we observe. Hence, he thinks the results are inconclusive.

In act 3, Mordehai Milgrom, the original inventor of MOND, posts a comment on the arXiv. He also complains about the sample size of the numerical simulation and further explains that there is much more to MOND than the MDAR correlation. Numerical simulations with particle dark matter have been developed to fit observations, he writes, so it’s not surprising they now fit observations.

“The simulations in question attempt to treat very complicated, haphazard, and unknowable events and processes taking place during the formation and evolution histories of these galaxies. The crucial baryonic processes, in particular, are impossible to tackle by actual, true-to-nature, simulation. So they are represented in the simulations by various effective prescriptions, which have many controls and parameters, and which leave much freedom to adjust the outcome of these simulations [...]

The exact strategies involved are practically impossible to pinpoint by an outsider, and they probably differ among simulations. But, one will not be amiss to suppose that over the years, the many available handles have been turned so as to get galaxies as close as possible to observed ones.”

In act 4, another paper with results of a numerical simulation for galaxy structures with particle dark matter appears. This one uses a code with the acronym EAGLE, for Evolution and Assembly of GaLaxies and their Environments. This code has “quite a few” parameters, as Aaron Ludlow, the paper’s first author, told me, and these parameters have been optimized to reproduce realistic galaxies. In this simulation, however, the authors didn’t use this optimized parameter configuration but let several parameters (3-4) vary to produce a larger set of galaxies. These galaxies in general do not look like those we observe. Nevertheless, the researchers find that all their galaxies display the MDAR correlation.

This would indicate that particle dark matter is enough to describe the observations.

Figure from arXiv:1610.07663 [astro-ph.GA]

However, even when varying some parameters, the EAGLE code still contains parameters that have been fixed previously to reproduce observations. Ludlow calls them “subgrid parameters,” meaning they quantify physics on scales smaller than what the simulation can presently resolve. One sees, for example, in Figure 1 of their paper (shown below) that all those galaxies already have a pronounced correlation between the velocities of the outer stars (Vmax) and the stellar mass (M*).

Figure from arXiv:1610.07663 [astro-ph.GA]. Note that the plotted quantities are correlated in all data sets, though the offsets differ somewhat.

One shouldn’t hold this against the model. Such numerical simulations are done for the purpose of generating and understanding realistic galaxies. Runs are time-consuming and costly. From the point of view of an astrophysicist, the question of just how unrealistic galaxies can get in these simulations is entirely nonsensical. And yet that’s exactly what the modified-gravity/dark matter showdown now asks for.

In act 5, John Moffat shows that modified gravity – the general relativistic completion of MOND – reproduces the MDAR correlation, but also predicts a distinct deviation for the very outside stars of galaxies.

Figure from arXiv:1610.06909 [astro-ph.GA]. The green curve is the prediction from modified gravity.

The crucial question here is, I think, which correlations are independent of each other. I don’t know. But I’m sure there will be further acts in this drama.